LEADING SEMANTIC SOFTWARE PACKAGE

Software Solutions

Loosely coupled components

No single service becomes a bottleneck across use cases and teams

High availability

High uptime and reliability through load-balanced processes

Horizontal and vertical scaling

Meet growing demand by adding more machines (horizontal) or increasing the capacity of existing ones (vertical)

Fully Dockerized software packages

Rapid deployments for minimal maintenance downtime

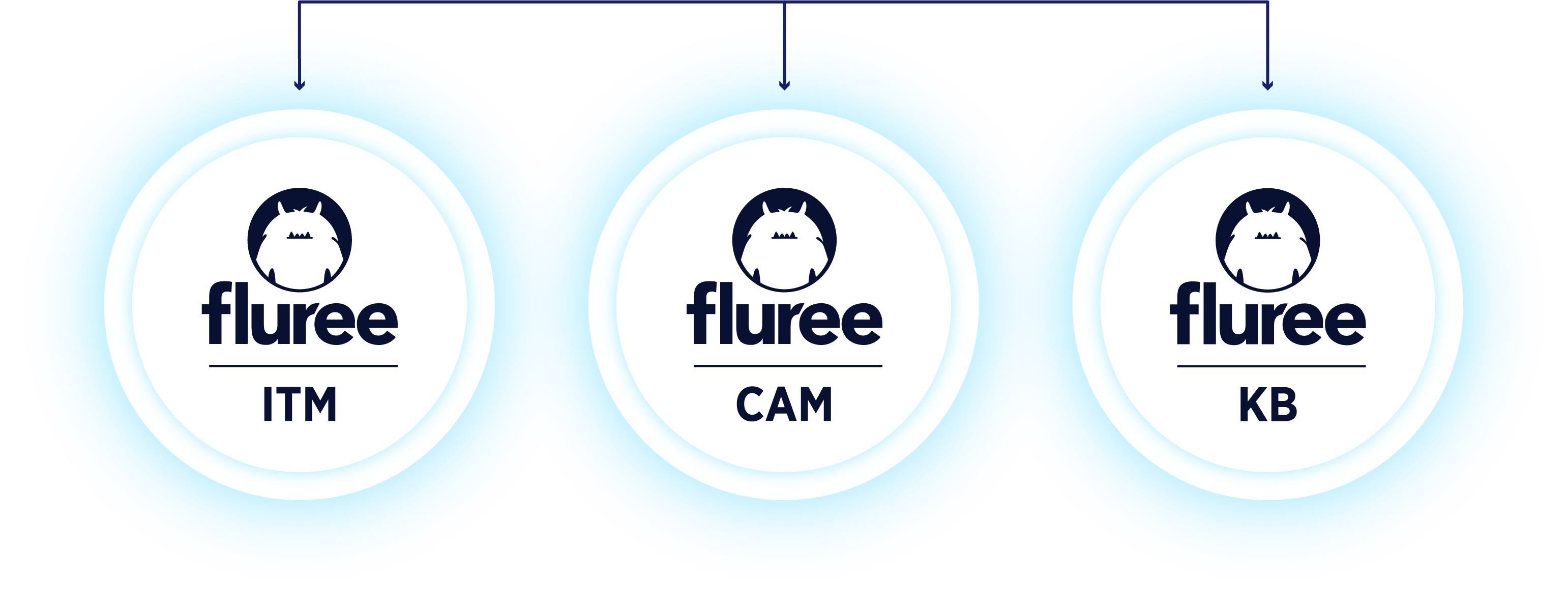

Intelligent Taxonomy Manager

Centrally manage, maintain and integrate enterprise data with content-centric applications.

Content Auto-Tagging Manager

Use AI to auto-tag content based on your data and extract new, meaningful data from it.

Knowledge Browser

Publish enterprise data via a web-based portal combining search with graphical visualizations.